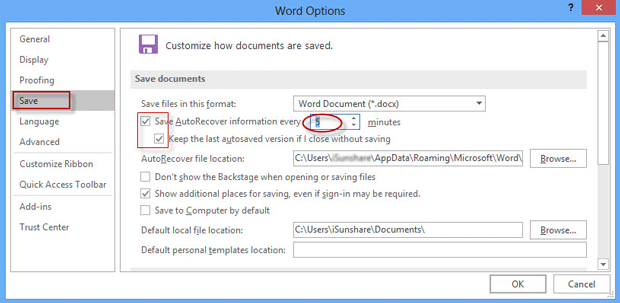

All computer users should get in the habit of saving, say after every paragraph or so, whenever they are comfortable overwriting previous work (see below). Who do you blame when your hard disk fails and you don't have a good backup? Who do you blame for not unplugging your machine in the midst of a bad thunderstorm? Last, autosave is very dangerous: suppose you make a big change in the file by deleting 10 pages of text, and autosave runs. Suppose you then decide that deleting that material was a huge mistake and that you really want it back in the file. Since autosave overwrote the old file, you are hosed. So you can see that autosave has serious risks as well.

It seems that you don't know the behavior of my script and of Eversave. Both save every ten minutes (or more often if we want), but they keep a datetime-stamped copy of each saved file.
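To make the datetime-stamped copies concrete, here is a minimal Python sketch of the idea. It is not the script discussed in this thread, nor what Eversave does internally: the function name `save_timestamped_copy`, the backup folder, and the file-name pattern are assumptions chosen for illustration only.

```python
import shutil
from datetime import datetime
from pathlib import Path


def save_timestamped_copy(document, backup_dir, now=None):
    """Copy `document` into `backup_dir` under a datetime-stamped name.

    Hypothetical helper: the naming scheme (stem_YYYYMMDD-HHMMSS.suffix)
    is an assumption, not the scheme used by any tool named in the thread.
    """
    document = Path(document)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)

    # One copy per save, so an earlier version is never overwritten.
    stamp = (now or datetime.now()).strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{document.stem}_{stamp}{document.suffix}"

    if document.is_dir():
        # Package documents are folders, so copy the whole tree.
        shutil.copytree(document, target)
    else:
        shutil.copy2(document, target)
    return target
```

Called every ten minutes (from a timer, cron, or launchd, whichever you prefer), this leaves one copy per save, so the ten deleted pages are still sitting in an older copy.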

I don't agree that autosave is mandatory and essential. Suppose you set autosave to every 10 minutes. Suppose the power goes out after 9 minutes. You might be hosed: your brilliant wordsmithing is gone, perhaps not retrievable, as you had a true brainstorm that is now lost.

(1) if the doc must remain a package, the index.xml file is packed (gzipped) as

(2) if the doc must be a flat file, the package (with its non-packed index) is packed with a tool which is not one of those I am aware of. Zip, Gzip, Ditto are unable to pack in a format accepted by iWork.

Yvan KOENIG (VALLAURIS, France) Sunday 23 August 2009 14:57:40


The number of corrupted files I received exploded after the introduction of iWork '09, and most often these corrupted documents are flat files. Maybe there is a bug in the code compacting the package that is built first: as I already explained, iWork builds a package and then packs it in one of the two ways listed under (1) and (2) above. I guess that because I have sometimes been told that the document was killed after the addition (or the removal) of a single character.

When I receive such a file, I always ask the owner whether the doc was stored on a server where it may be used by several machines. Every time the response is: no, it was on a single-user system. Most often, a document corrupted by a power failure is not readable with current tools like TextEdit or TextWrangler: the operating system is fooled by the wrong descriptor in the hard disk's table of contents. The corrupted files, at least those which I received, are always readable with standard tools, at least with hexadecimal editors. When the Index.xml file is available, I can decipher it and rebuild the entire document, but Pages is unable to do the same, simply because, somewhere among the thousands of instructions, there is one which it is unable to decipher. My guess is that there is a bug pushing the code to write a wrong instruction. I would not be surprised if it is linked to the most common oddity: an instruction which doesn't correctly handle a carry when the data being processed crosses a page boundary.
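For readers who want to look inside such a file themselves, the following Python sketch shows the kind of inspection a hexadecimal editor gives you. It is only an illustration under stated assumptions: the helper names `read_maybe_gzipped` and `hex_dump` are mine, and the only format knowledge used is that gzip data starts with the bytes `1f 8b`. It does not repair anything and knows nothing about the iWork format itself.

```python
import gzip
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # the first two bytes of any gzip stream


def read_maybe_gzipped(path):
    """Return the file's bytes, gunzipping them if they look gzipped."""
    data = Path(path).read_bytes()
    if data[:2] == GZIP_MAGIC:
        return gzip.decompress(data)
    return data


def hex_dump(data, width=16):
    """Format bytes as offset, hex values, and printable ASCII."""
    pad = width * 3 - 1  # room for `width` two-digit values plus spaces
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{offset:08x}  {hex_part.ljust(pad)}  {text_part}")
    return "\n".join(lines)
```

Something like `print(hex_dump(read_maybe_gzipped(path)[:256]))` on the index file of a package is enough to see whether the XML is still there in readable form.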

I know a way to get documents without an Index.xml file.

I receive in my mailbox documents sent by users asking me to try to recover the embedded content. In my own use I have never had a corrupted document but, contrary to what you have often written, when I receive a corrupted file I don't say: the user did something wrong.
